Leah Campbell

Leah Campbell is a student at Yale University currently completing her research at USGS Menlo Park under Dr. Rufus Catchings.

This summer I will be at the USGS’s Earthquake Science Center in Menlo Park, CA. Although I will be helping out with fieldwork throughout the summer for other projects (including a possible survey for geothermal energy in Nevada), my main project will focus on the San Gregorio Fault Zone where it comes onshore at Point Año Nuevo, here in California. We will be doing a high resolution seismic imaging survey across the fault, in order to better understand and visualize the subsurface in the area. Then, through data processing, primarily with the ProMax program, we will interpret the resulting images of the subsurface to better understand the mechanisms and actual location of different strands of the fault zone. These images can also be used to assess the earthquake risk along the fault, as well as determine the location of the water table and groundwater sources in the area.

Final day- wrapping it all up

August 16th, 2012

So today is the final day of the final week of my internship and what a summer it’s been! I may still come in on Monday for some last-minute things, since I don’t go back to school for another week, but I wanted a few last days at home just to relax since my grandparents are around. Plus, I’m still not done exploring California and this weekend I’m heading up to Lake Tahoe!

As I expected when the summer got off to a slow start, this week has inevitably been pretty busy. I wasn’t able to finish the S-wave processing, but I was able to get it to a good stopping point so someone else on the team can pick it up once I’m gone. I did get through all of the preprocessing- stacking repeated shots, killing bad shots, creating fake geometry, creating log files, shifting first breaks, inputting real geometry etc…- and I’ll admit I’m not too disappointed that I don’t have time to do first break picks again! And of course, since I won’t be back in this office again, I had to completely reorganize my life. After 11 weeks, both my computer and my desk were a total mess! I made sure to email myself anything I could possibly need over the semester, and tried to put together all of my files and notes for the next person picking up this data set. I was also able to sit down with my advisor and another guy on the team, go through some of their old posters, and talk through what I’ll want and need to do leading up to AGU. Never having done a poster before, I can’t tell you what a relief it was to get a sense of what exactly is expected of me come December! Since I can’t remotely access the USGS server to continue processing data, my job this semester will be writing everything up. My advisor is going to help me transfer figures from ProMax into Illustrator and then email them to me so I can make them poster-ready. It means I’ll still have to figure out Adobe Illustrator once I’m back at school so I can manipulate the images, but it also means he’ll be able to go through everything I want to put on the poster beforehand.

Now, just to look over some of my goals I had had at the beginning of the summer-

I wanted to become confident and self-sufficient with ProMax and Unix. Of course I still had to ask others a few questions once in a while, and I was constantly googling Unix syntax, but for the most part I think I definitely achieved this goal. The S-wave preprocessing, which at the beginning of the summer would have taken me forever since I had 489 shots to grapple with, went much quicker than I expected; it even went much faster than the P-wave preprocessing. On top of that, for the past few weeks I’ve had to teach most of what I’ve been learning to another student in the office who was away for some time. For me at least, having to teach someone something always solidifies my own understanding of it.

I also wanted to become more comfortable with going out into the field. After we did my line in June, I haven’t had to organize any of our fieldwork, but I’ve had the chance to go out on five different occasions and by now, I feel more than confident showing newcomers or volunteers how and why we do things the way we do. Plus, I don’t feel completely lost in the warehouse anymore and I think I could explain reasonably well to anyone who asked what each tool we use is for and why we bring what we bring into the field. All in all, I think another success!

My other goals and hopes, in general, all had to do with taking what I’ve learnt this summer and applying it to other situations, either by understanding more about seismic hazards and geophysics research, or by improving my programming ability, especially with regard to Illustrator or GMT. I haven’t touched Illustrator all summer, and though I’ve used GMT, the scripts have always been generated by prewritten C code, which I find easier to understand. Perhaps this semester I’ll have to work more on both of these programs, as well as others, when putting together my poster, but as of now, they haven’t been touched. In terms of the ‘larger picture’ of our research, I do understand better how the work we’re doing can be used in more applied, practical situations. Two of the lines we did were for outsiders interested in water resources or liquefaction, which I wouldn’t have expected at the beginning of the summer. Plus, I was able to look a bit into what some of the other scientists in the Earthquake Hazards program were working on, and it’s really helped my understanding of what exactly geophysicists do! My only regret is that I didn’t reach out more to some of the other departments and centers here in Menlo Park, given how wide-ranging my interests are. That’s definitely something that I will work on if I ever participate in similar research projects in future summers.

So, all in all, it’s been an incredibly instructive and fun summer. Since I’m only a rising sophomore, I can hardly make any concrete decisions about what my plans are after graduation, but this has been a good opportunity for me to get a better feel for scientific research. Although I may not stay in seismology, it’s definitely convinced me to stay in geology and geophysics and it was one of the best possible experiences I could have hoped for following my freshman year!

Week Ten- one more to go!

August 13th, 2012

So technically I’ve entered the realm of my final week here, but it’s been pretty hectic, so I never had a chance to write a blog post for last week! On Monday I had the pleasure of trying to get an abstract in for internal review here at the USGS. I had had two people review it over the weekend (since the deadline was noon on Monday), including my advisor, but then, at 11:50am, while checking through the abstract one last time with my advisor, I was informed that neither he nor my other reviewer could actually review it because they were also co-authors on the abstract (which had totally slipped my mind given that this was just internal review). So I began wildly searching the office for two new people to look at it (which was surprisingly difficult given that half the people were busy reviewing someone else’s abstract, and the other half were out at lunch). But finally I was able to get it to not two, but three reviewers, who all had it back to me by mid-afternoon. No one seemed particularly stressed out about the noon deadline, so that helped calm me down while I made corrections and then went through it one last time with my advisor. Finally, by the very end of the day I was able to hand it in for internal review. They came back and approved it on Wednesday, so I was able to submit it to AGU with a bit more time to spare than on Monday!

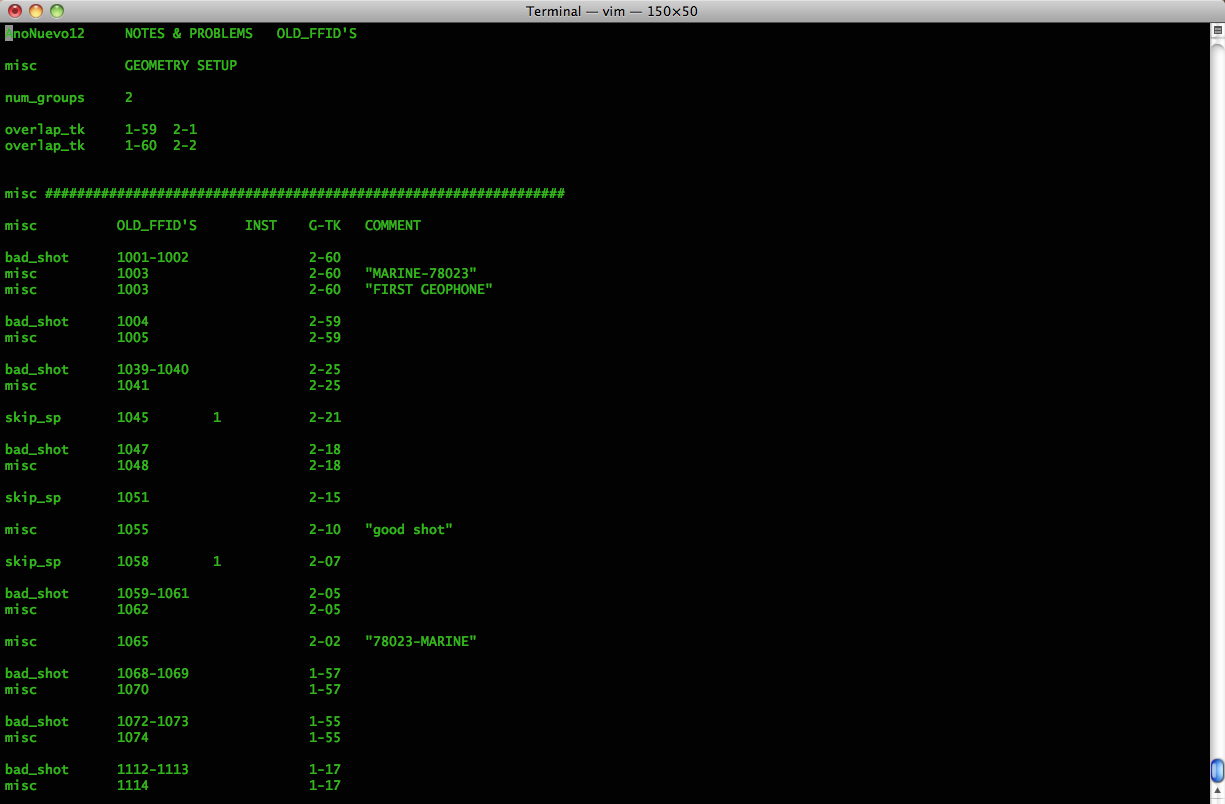

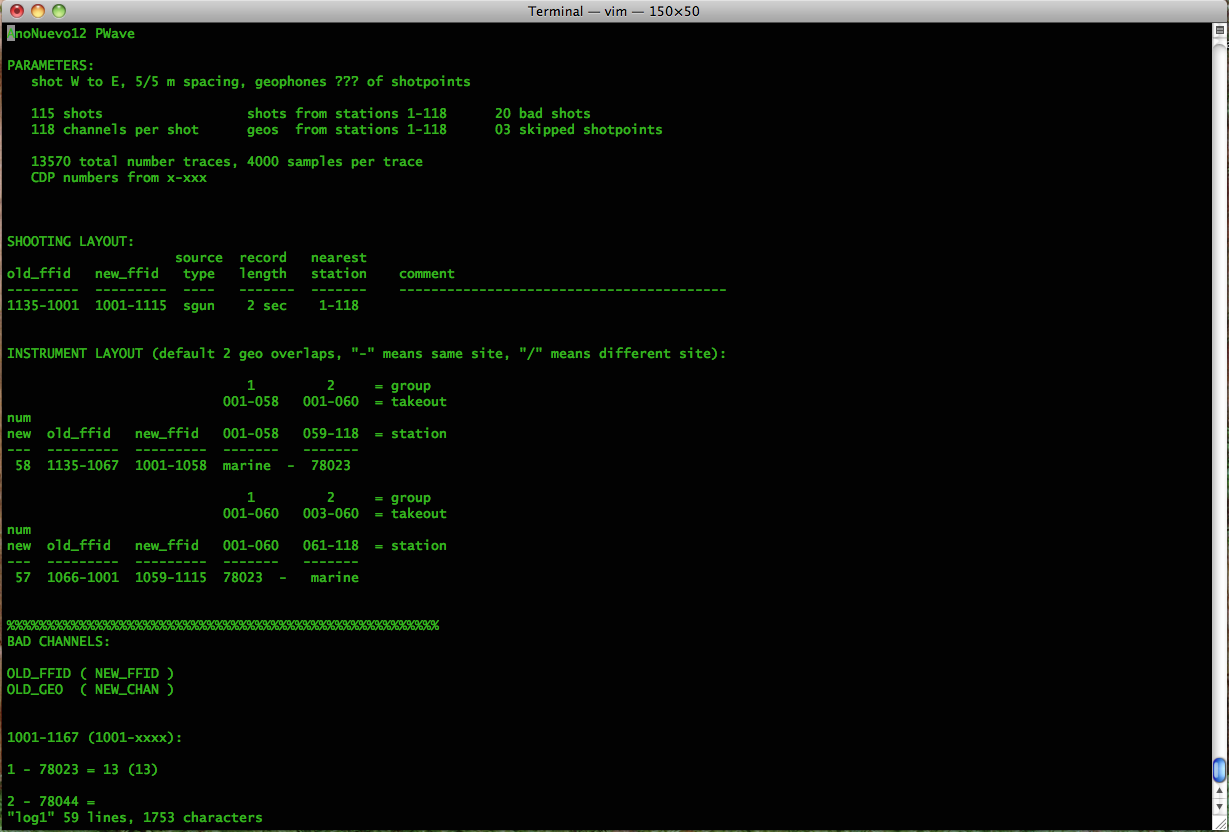

I ran a few more migrations at the beginning of the week, but they were still not very satisfying, so Rufus recommended that I just move on to S-waves rather than worry too much about them. As I mentioned last week, the velocity model has to be close to perfect for migration, and we just didn’t get data deep enough to draw any solid conclusions about the model at depth. So in the abstract, I stopped at stacking. Perhaps, once I’m back at school, we’ll be able to look at the migrations again and possibly get them worked out, but in the meantime I’m just not worrying about them. Instead, I started looking at S-waves. With four days left here (my last day is on Thursday), I doubt I’ll get anywhere close to finishing them, but at least I can have them set up so someone here at the office can get right into picking, without having to worry about preprocessing, once I’m gone. The analysis steps for S-waves are similar to those for P-waves, but slightly more complicated and immensely more tedious. Instead of 135 old FFIDs (original shots), I now have 489 (it’s still 118 channels, but we took at least 4 shots at each geophone). As with the P-waves, I had to create fake shot files and input a fake geometry into ProMax (though this time actually based on all 118 channels, instead of the 60 used for the P-wave fake geometry). I then had to create multiple log files, also for input into ProMax, that used the field notes to label which shots were bad, which had to be stacked, and which were skipped. I also had to keep track of when the direction we were hitting the block changed in each set of shot points, so I could reverse the polarity of half the shots in ProMax and have them all share the same polarity when I stacked them. Now I’m in the process of checking through all 489 shots to find bad traces, as well as shots that look out of place in each set. Needless to say, it’s going to take me a bit longer than it did for P-waves! Then I’ll have to actually stack everything in ProMax and shift all the shot points up, like I did with the P-waves, and then I’ll be ready to pick, which, given how noisy the data is, should be another challenge to look forward to this week.
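Reversing polarity before stacking matters because a trace recorded when the block is hit from the opposite side is roughly a sign-flipped copy, so averaging it unflipped would cancel the signal. Here is a minimal Python sketch of that idea, assuming each repeated shot is a list of samples and each hit direction is recorded as +1 or -1; the function name and data layout are hypothetical and are not how ProMax does it.

```python
def stack_repeated_shots(shots, hit_directions):
    """Average repeated S-wave shots after matching their polarity.

    shots: list of traces (equal-length lists of floats), one per hit.
    hit_directions: +1 or -1 per hit (a hypothetical encoding of which side
    of the block was struck). Traces marked -1 are sign-flipped first.
    """
    if not shots or len(shots) != len(hit_directions):
        raise ValueError("need at least one shot and one direction per shot")
    n_samples = len(shots[0])
    stacked = [0.0] * n_samples
    for trace, direction in zip(shots, hit_directions):
        if len(trace) != n_samples:
            raise ValueError("all traces must have the same length")
        for i, sample in enumerate(trace):
            stacked[i] += direction * sample
    # Dividing by the number of shots gives a mean stack.
    return [value / len(shots) for value in stacked]


# Two hits from each side of the block: matching polarity recovers the signal,
# while stacking without the flip cancels it.
hits = [[1.0, 2.0, 3.0], [-1.0, -2.0, -3.0], [1.0, 2.0, 3.0], [-1.0, -2.0, -3.0]]
print(stack_repeated_shots(hits, [1, -1, 1, -1]))  # [1.0, 2.0, 3.0]
print(stack_repeated_shots(hits, [1, 1, 1, 1]))    # [0.0, 0.0, 0.0]
```

Averaging rather than summing keeps the stacked amplitude comparable to a single shot; either works as long as it is applied consistently.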

On Sunday, though, we had a slight change of pace and went back out to do some fieldwork. I’ll admit, being at work at 7am on a Sunday wasn’t great, but it was nice to get out into the field again, even if this time we were going to a dump! The fieldwork took place at a landfill in the East Bay and took us all day. Apparently the landfill is slowly slipping into the bay (all that sits between it and the bay is a salt marsh that I believe is also a wildlife refuge), so a geotechnical company is going to put in a metal wall to act as a levee between the dump and the bay. But first they want a survey done to get a sense of what the subsurface looks like. We ran a 300m line along the edge of the landfill and then a 60m line, perpendicular to that, up a steep slope of mulch that undoubtedly overlay trash. However, being right next to the marsh and the bay, the view was really nice and the smell was completely bearable. Besides having to dig through two feet of mulch on the hill to actually reach dirt, it was a nice afternoon and we were able to get done pretty quickly. It was a nice way to spend my last weekend before I finish up work here!

Week Nine

August 6th, 2012

Last Friday I officially finished my ninth week at the USGS. I just can’t believe that the summer is coming to a close and I don’t know how ready I am to go back to school in only three weeks! Fortunately, my internship will continue for another two weeks, which is good given that I can’t remotely access the USGS server so everything needs to be done by the end of the summer. Rufus says we can work together on the poster remotely while I’m at school, but all data analysis and interpretation needs to be done by the end of summer.

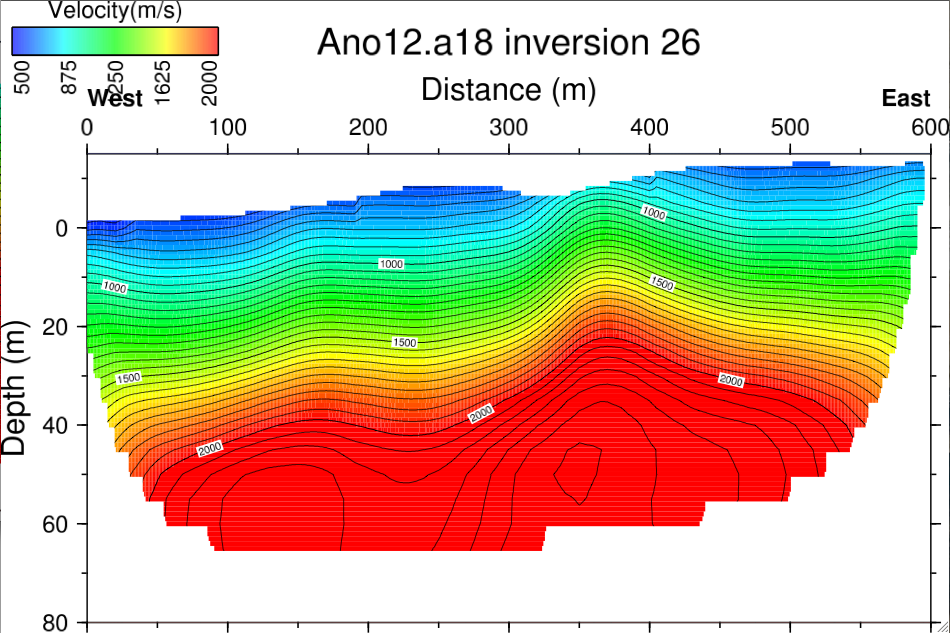

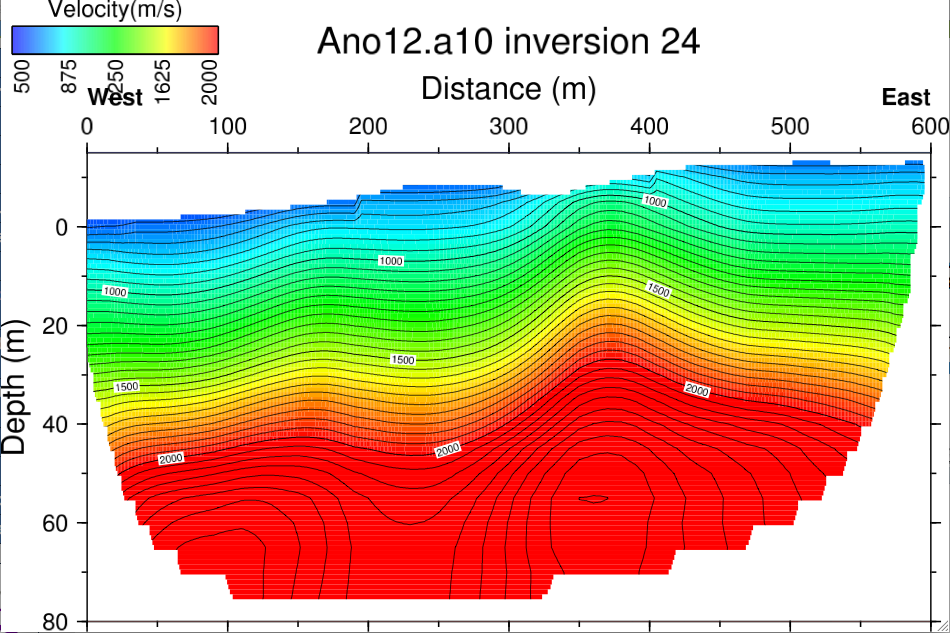

Last week, I generated a bunch of velocity models (examples above) and went through them all to pick the best one. I created one or two that used all of my picks in the inversions, without killing any, in order to compare what the model looks like with and without killed picks. For the most part there wasn’t much difference, which is satisfying since it means none of my picks (even those that I killed) were terrible. In the end I chose the three models that I thought were best. When looking for the best model, I looked for the version with the fewest killed picks, the highest iteration number, and the greatest depth. The deepest I was able to get was only about 70m, but I was able to see a clear low velocity zone that corresponds to the fault strand we expected to find.
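Comparing candidate models on several criteria at once amounts to sorting on a compound key. The sketch below assumes each run is summarized by its number of killed picks, its iteration number, and its maximum resolved depth; the record type is hypothetical, and the order in which the criteria are weighed is an assumption, since the post lists the criteria but not their priority.

```python
from dataclasses import dataclass


@dataclass
class ModelRun:
    """One candidate velocity model (hypothetical bookkeeping record)."""
    name: str
    killed_picks: int   # number of first-break picks removed before inversion
    iteration: int      # inversion iteration the model image comes from
    max_depth_m: float  # deepest resolved depth in meters


def rank_models(runs):
    """Sort candidates best-first.

    Assumed priority: fewest killed picks, then highest iteration,
    then greatest depth.
    """
    return sorted(runs, key=lambda r: (r.killed_picks, -r.iteration, -r.max_depth_m))


# Made-up numbers, for illustration only.
candidates = [
    ModelRun("a", killed_picks=10, iteration=20, max_depth_m=60.0),
    ModelRun("b", killed_picks=5, iteration=15, max_depth_m=50.0),
    ModelRun("c", killed_picks=5, iteration=25, max_depth_m=40.0),
]
print([r.name for r in rank_models(candidates)])  # ['c', 'b', 'a']
```

In practice the final choice still came down to looking at the images; a ranking like this only narrows the list.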

Once I had my models, I had to extend them down to 5000m so we could stack and migrate them. In reality the extension is largely guesswork, since you have to make up what you think the model looks like below what you can see. In the stacking it doesn’t make too big a difference, but in the migrations the velocity model needs to be close to perfect or else the migrations don’t come out properly. So for the stacking, I used a simple code that set the 2800m/s contour line at 175m, with data points at 5m spacing, and then extended that straight line down exponentially, with data points at 100m spacing, to 5km. Then, using ProMax, I created a stack for each of my best velocity models. I ended up with four stacks, though, since I messed around a bit with the stacking parameters (stacking with a higher bandpass filter etc…). In the end most of the stacks came out really well. We could clearly see the west-dipping fault that we saw on the velocity models, and it even looked like we were going to be able to see a few other fault strands, some of which showed a reverse component of motion, which is important given how reverse motion can affect earthquake shaking. All around, I was pretty pleased with the stacking results and felt confident writing my abstract. I would have liked to include information about migrations in the abstract too, but I wasn’t able to get the migrations to work last week. Because the program (a pre-stack Kirchhoff depth migration) is very sensitive to the velocity model, arbitrarily extending the model downward meant that the migration results just weren’t as clear as the stacking results. I spent a while trying to figure out the code that extends the velocity model so I could modify it to carry the low velocity zone, where we believe the fault to be, down below 70m depth. This week I’m going to try my new velocity models to see if that helps the migrations at all.
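The depth sampling described above (5m nodes down to 175m, then 100m nodes to 5km) can be sketched in a few lines. This only builds the node depths, in integer meters; it does not reproduce the exponential velocity extension itself, whose formula isn't given here, and starting the coarse nodes at the next multiple of 100m (200m) is an assumption.

```python
def extension_depths(shallow_bottom_m=175, shallow_step_m=5,
                     deep_bottom_m=5000, deep_step_m=100):
    """Depth nodes (m) for an extended velocity model.

    Fine spacing down to the 2800 m/s contour depth, then coarse spacing
    to the bottom of the model. Starting the coarse nodes at the next
    multiple of deep_step_m is an assumption.
    """
    shallow = list(range(0, shallow_bottom_m + 1, shallow_step_m))
    first_deep = (shallow_bottom_m // deep_step_m + 1) * deep_step_m
    deep = list(range(first_deep, deep_bottom_m + 1, deep_step_m))
    return shallow + deep


depths = extension_depths()
print(len(depths), depths[:3], depths[35:38], depths[-1])
# 85 [0, 5, 10] [175, 200, 300] 5000
```

The coarse spacing below 175m reflects that nothing there is constrained by data anyway, so finer nodes would add no information.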

Last week I also finished up my abstract. Although they’re only due on Wednesday for AGU, we have to submit them to USGS on Monday for internal review. It’s apparently quite a process and unfortunately I was only clued into this fact on Friday afternoon, but I was able to quickly find two reviewers to look over it during the weekend and hopefully in the next hour and a half I can get all the paperwork together to submit it officially to the science center director! If all goes well in that department, I’ll be able to try some new migrations, and start working on putting together bits and pieces of my poster!

Week Eight

July 30th, 2012

Another week has just flown by, though I think I’m really starting to make progress, especially since first break picks are finally completely done. After I had done my picks last week (and redone them two or three times!), I exported them from the ProMax database into an ASCII file on the terminal. I could then use a KILL file I had created, listing all the traces I hadn’t been able to pick or where I was unsure of my picks, to remove the bad picks from the ASCII file. Then, using a premade code, I ran four different checks on this file, including reciprocity, to quality control my picks. And of course, after I completed each test, I would have to go back and fix the database, re-export the data and run the test again. Needless to say, I was crossing my fingers for it not to give me any errors each time I ran the tests! The first test listed any picks that were less than or equal to one, as well as picks above a maximum value I input. The second test listed any picks that differed from their neighboring picks by more than a set maximum. The third test listed picks that decreased (the time became shorter) with increasing distance from the shot. Even if one pick was just a millisecond earlier than the one before it, it could really throw off the data, given that it would be physically impossible! The final test was the reciprocity test that I mentioned last week. This compares the travel time from the shot point to another geophone with the travel time from that other geophone back to the original shot point. It was inevitable that I had a few that were off, but as long as they were under 10 milliseconds or so, it wasn’t a problem. It didn’t take too long to fix most spots, but of course once you change one set of picks, you have to go through and make sure it agrees with all the following picks.
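The first three checks are simple enough to sketch in Python. This is not the premade code used here: it assumes the picks for one shot are stored as a dict from station number to first-break time in milliseconds, it reads "less than or equal to one" as 1 ms (an assumption), and the function names and thresholds are hypothetical. Reciprocity needs picks from more than one shot, so it is left out.

```python
def range_check(picks, max_ms, min_ms=1.0):
    """Test 1: flag picks <= min_ms (assumed 1 ms) or above max_ms.

    picks: dict station -> first-break time (ms) for one shot.
    """
    return sorted(s for s, t in picks.items() if t <= min_ms or t > max_ms)


def neighbor_check(picks, max_jump_ms):
    """Test 2: flag picks that jump more than max_jump_ms from the previous station."""
    stations = sorted(picks)
    return [b for a, b in zip(stations, stations[1:])
            if abs(picks[b] - picks[a]) > max_jump_ms]


def monotonic_check(picks, shot_station):
    """Test 3: flag picks that get earlier while moving away from the shot."""
    right = sorted(s for s in picks if s >= shot_station)
    left = sorted((s for s in picks if s <= shot_station), reverse=True)
    flagged = []
    for side in (left, right):
        # Each side is ordered by increasing distance from the shot.
        for prev, cur in zip(side, side[1:]):
            if picks[cur] < picks[prev]:
                flagged.append(cur)
    return sorted(flagged)


# Made-up picks for a shot at station 1.
picks = {1: 0.5, 2: 5.0, 3: 9.0, 4: 8.5, 5: 40.0}
print(range_check(picks, max_ms=30.0))        # [1, 5]
print(neighbor_check(picks, max_jump_ms=10))  # [5]
print(monotonic_check(picks, shot_station=1)) # [4]
```

Note that the pick right at the shot point is flagged by the range check; depending on how the real code treats the zero-offset trace, that may be expected rather than an error.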

The next step was to invert the first picks from the refraction data and turn them into a suitable velocity model. Making the inversions isn’t too difficult, but it takes the program (written by fellow IRIS interns Amanda and Rachel’s mentor) a while to run. One also has to create multiple versions, each with slightly different inversion parameters- initial velocity model, grid size, smoothing parameters etc… Unfortunately, the GPS readings from the line are all off because of tree cover, so I haven’t been able to input the real geometry for the line yet. But because the inversions need elevation data, I had to trace out my line as best I could on Google Earth, record the elevation at points 5m apart, convert elevation from feet into inches, and then turn the raw elevation data for each point into height above the lowest point. It’s not a perfect method, but it does add a sense of the topography to the inversions. Once the inversion program has finished running, I can run a GMT program that turns the inversions into an actual image of the velocity model. Each version produces 30 or so iterations, so I’ve had to go through and choose the best iteration for each version and compare them to get the ideal velocity model. However, I realized last Friday that I had reversed the elevation data so it ran east-west, while my shot point data runs west-east. That meant I had to go back, recalculate the elevations on Google Earth and rerun the inversions.
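Turning raw elevations into heights above the lowest point, and making sure they run in the same direction as the shot points, is exactly the step that went wrong here. A minimal sketch, assuming the elevations are a plain list sampled every 5m along the line; the function and parameter names are hypothetical, and no unit conversion is done (heights stay in whatever units go in).

```python
def relative_elevations(elevations, spacing_m=5.0, recorded_east_to_west=False):
    """Turn elevations sampled every spacing_m along the line into
    (distance along line, height above the lowest point) pairs.

    If the samples were traced east to west, reverse them first so they
    match shot point data that runs west to east.
    """
    ordered = list(reversed(elevations)) if recorded_east_to_west else list(elevations)
    lowest = min(ordered)
    return [(i * spacing_m, e - lowest) for i, e in enumerate(ordered)]


# Made-up elevations traced starting from the east end of a line.
print(relative_elevations([30.0, 10.0, 20.0], recorded_east_to_west=True))
# [(0.0, 10.0), (5.0, 0.0), (10.0, 20.0)]
```

Making the direction an explicit argument means a reversed profile has to be a deliberate choice rather than something that slips through.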

I would have then gone through all of my velocity models on Friday, but I had to leave that until today since I left work early on Friday to drive up to Yosemite for the weekend with my family. I’ve been in California 3 years and have never made it up, but needless to say it was an AMAZING weekend! Of course it made getting up early again this morning for a Monday at work quite difficult!

Week Seven

July 20th, 2012

I can’t quite believe seven weeks of my internship are already done! I still have four weeks left, but I feel like the halfway point of the summer just flew by. Things have been pretty slow this week, though looking at my action plan for the rest of the summer, I know it’s sure to pick up by next week. Since you can’t remotely access the USGS server unless you’re on a USGS laptop, I have to get all of my processing, interpretation and figure making done by the end of the summer. I’m not going to worry at all about my poster until I’m back at school in the fall. Abstract deadlines are also coming up, so I really want to get through a lot in the next two weeks to help add substance to my abstract.

As I mentioned, this week was quite slow. I was picking the entire week essentially. I’ve never picked first arrivals before, so it was a pretty tedious week spent mostly going over my own mistakes. I finally got through all of my shots this morning, but now I’m just going through them again to make sure they all make sense as a consecutive series. Hopefully, then this afternoon, I’ll be able to learn a bit about the next step in processing- reciprocity. To improve and check the first picks, we use the basic physical fact that the time taken to travel between two points is the same, no matter which direction you come from. We can check the first picks for one shot by looking at the time it takes the energy to get from geophone A (the shot) to geophone B and compare it to the time it takes energy to get from the later shot at geophone B, back to geophone A. Hopefully, between that and killing any traces I couldn’t pick, I will weed out most of the bad picks (because there are a few!).
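The reciprocity idea can be sketched as a comparison of each pick with its mirror image. This assumes first-break times are stored in a dict keyed by (shot station, receiver station); the function name is hypothetical, and the tolerance is a parameter you choose rather than a fixed rule.

```python
def reciprocity_misfits(times, tolerance_ms):
    """Compare t(A->B) with t(B->A) for every station pair recorded both ways.

    times: dict mapping (shot_station, receiver_station) -> first-break time (ms).
    Returns (a, b, |difference|) for pairs whose mismatch exceeds tolerance_ms.
    """
    misfits = []
    for (a, b), t_ab in times.items():
        # Visit each pair once (a < b) and skip pairs without a reciprocal pick.
        if a < b and (b, a) in times:
            diff = abs(t_ab - times[(b, a)])
            if diff > tolerance_ms:
                misfits.append((a, b, diff))
    return sorted(misfits)


# Made-up picks: the 1<->3 pair disagrees by 15 ms, the 1<->2 pair by only 2 ms.
picks = {(1, 2): 10.0, (2, 1): 12.0, (1, 3): 20.0, (3, 1): 35.0, (2, 3): 8.0}
print(reciprocity_misfits(picks, tolerance_ms=10.0))  # [(1, 3, 15.0)]
```

A mismatch only says that one of the two picks is off, not which one, so both shot records still need to be looked at.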

In the meantime, I realized I never looked back at the goals I mentioned in the first two weeks. Now that the halfway mark has come and gone, I decided it might be a good idea to go back briefly over some of my primary goals.

“I know I’m going to be using a program called ProMax, as well as a lot of basic Unix so a large goal I have for myself is just to become comfortable and self-sufficient in both, given that I don’t have a strong programming background.”

I’ve definitely worked on this one a lot and I feel a lot more comfortable with both Unix and ProMax. I’ve learned to find mistakes and fix them myself and I have a better grasp on what specific flows and codes mean.

“On my final product, I know Adobe Illustrator and GMT will be involved so hopefully I can figure those out as well.”

I haven’t touched Illustrator or GMT all summer, but I know I’m still going to have to. One of the guys in our office has codes in C that will create GMT scripts, plus Dulcie has been willing to let me look through some of her scripts to get a better grasp on GMT, so hopefully, I’ll be okay on that front. With Illustrator, this may be something I have to figure out when I’m at school and trying to throw together some maps for my poster.

“I want to become more comfortable with doing fieldwork and learn to understand all of the little details that go into both planning and doing real fieldwork.”

I think I’ve also had success with this one. We went out 4 or 5 days (and may be going out a bit more in August) and I’ve become familiar with all of the tools we use, what each instrument does, and how to take care of and install the instruments. Plus, for two of those days I was considered the PI, so I had the opportunity to put together and organize a field team, which was an interesting experience when everyone is years older than you!

“I want to be able to understand how this kind of work can actually advance our understanding of seismic activity and fault hazards.”

I haven’t gotten to interpretation yet, but looking through past papers and posters and making an action plan for the last few weeks has helped me understand why we do each step in the processing. I’ve also gotten a better grasp, I think, on why the USGS does this and which results from these surveys they deem worthy of releasing to the public.

Week Six

July 13th, 2012

This week I’ve continued with the (pre)processing of my data set and I’m finally starting to get the hang of using the Vi editor and ProMax. I’ll admit the week did get off to a slow start and I ran into a few problems, mostly caused by my own carelessness. On Monday, we had a power outage and then the madmax server (which we need to get onto ProMax) went down, so I had to wait a few hours for it to be rebooted. I did spend some of that time talking to my advisor about creating a working plan, now that the preprocessing is finishing up, of things that I want to do to the data and what I hope to get out of it. I’ve been going through a few more of my advisor’s papers and papers published by the team here, as well as AGU abstracts they’ve written, to get a sense of the usual processing steps. It first involves getting through the preprocessing and then first break picking, but from there I will have to migrate and stack the data, use reciprocity to make up for bad and skipped shots, and possibly use deconvolution to improve the resolution of my images. I think I understand all of the concepts fairly well, but we’ll see how it goes when I actually have to start applying them using ProMax flows! It was useful, though, to get a sense of what I need to get done in the next five weeks, and also to start thinking about what kind of images and text I’m going to want on my poster.

Finally I was able to get back onto madmax and learn the next few preprocessing steps. On Tuesday, I ran into a wall when one of the flows kept freezing on me, but it was soon pointed out to me that the file I was trying to import was simply empty (the program I used had malfunctioned and not copied anything to the file). Then I kept getting errors on another flow, but soon realized it was only because I was using the wrong instrument number. And then of course, I spent a while looking at the output file of one of the flows responsible for removing repeated shots, before remembering that I didn’t have any repeated shots and that the output file was supposed to be essentially empty. Those silly little mistakes are a lot of what has been slowing me down, but once I got through those, I was able to get the fake geometry all set up in ProMax and then get rid of any bad shots from my data. Technically, once the fake geometry was done and the necessary files were imported onto the system, I was supposed to upload the real geometry, which is based on the actual coordinates of the geophones. But since I haven’t gotten that file yet from our GPS guy, I had to upload a new ‘less’ fake geometry. It still uses the spacing between the geophones to set the coordinates, but this time it bases the geometry on all 118 stations, rather than a standard 60 stations. I then had to go through all of the same uploading and setup steps as before, and this time run a flow that combined and re-sorted the data from both RXs together.

At that point, I needed to artificially move up every shot to the same time to compensate for any variations in shooting. To do this I just had to run a few more flows and ProMax code to create a spreadsheet of the amount of time each shot needed to be shifted (we wanted every shot to be as if it had taken place at 2ms). I then had to read this spreadsheet into ProMax so it would shift all the shots. In the same flow, I was also able to kill all of the bad traces I had found previously. This flow also did automatic first break picks on the trace at which each shot originated. With this I could make sure that the first picks looked good, that the polarity (the direction the geophones were facing) was the same for all of the traces, and that each shot was recorded at the correct geophone. Once all of these checks had been done and the shots had been shifted, I was able to begin the next flow- first break picking. I have quite a lot of shots and my data is quite noisy, but hopefully I’ll have picked all my first breaks for the P-wave line by early next week.
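The shift spreadsheet boils down to one subtraction per shot. A minimal sketch, assuming the first break on the trace at the shot point stands in for the shot time and that a shift is added to every time on that record; the function names are hypothetical and this is not ProMax code.

```python
TARGET_MS = 2.0  # every shot should look as if it fired at 2 ms


def static_shifts(shot_trace_picks, target_ms=TARGET_MS):
    """Per-shot time shift (ms) that moves the first break on the shot-point
    trace to target_ms. Positive values delay the record, negative advance it.

    shot_trace_picks: dict FFID -> first-break time (ms) on the trace
    recorded at the shot point (an assumed stand-in for the shot time).
    """
    return {ffid: target_ms - t for ffid, t in shot_trace_picks.items()}


def apply_shift(pick_times_ms, shift_ms):
    """Shift every time on one shot record by the same amount."""
    return [t + shift_ms for t in pick_times_ms]


shifts = static_shifts({1001: 5.0, 1002: 1.5})
print(shifts)                                       # {1001: -3.0, 1002: 0.5}
print(apply_shift([5.0, 9.0, 14.0], shifts[1001]))  # [2.0, 6.0, 11.0]
```

Because the whole record moves by one constant, the relative timing between traces, which is what the velocity analysis depends on, is unchanged.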

Of course I ran into lots of little problems along the way, but it’s amazing how much better I’ve become at actually identifying and solving the problems myself. I’m also a lot better at understanding what the actual issue is when someone points out a problem to me. So it’s still slow going, but I would say I’ve definitely made progress!

Week Five

July 10th, 2012

Last week I continued with the preprocessing of my data set in ProMax. Because we shot the line backwards (they like them to run W. to E.) and the line was long enough that we had to use two RXs and two cables of takeouts, it means there are a lot of little things I need to get my head around first, while using ProMax, which makes the process move a bit slowly. Again, at the beginning of the week, I sat down with the main ProMax guy on our team and took notes as he went through his own data set. We also went through the log files I had made the week before to confirm that there were no mistakes. On the files, I have to keep track of which stations are on which cable, how the new FFID numbers match up to the old, and how many takeouts we want to be read by each RX (when we switch over to the other cable, we also switch the placement of the RXs and what they’re recording because one of them is apparently much better than the other). That process was relatively straightforward, but involved a lot of thinking about how the RXs were placed and how the switch, midway down the line would have affected which stations were being recorded.

Once the log files looked good, I was able to get onto ProMax and go through all of our shot gathers. For each old FFID (which corresponds to each shot), I had to go through the shot gather and find bad traces (which would correspond to bad takeouts). I had to do this for all 135 shots on the one RX, and then for all the shots around the midpoint for the other RX (because it is less sensitive, the data is not nearly as good from this instrument). Once I had a sense of the bad traces, I began looking at receiver gathers. These look a lot like shot gathers, but instead of showing every trace for each individual shot, they show every shot recorded by each individual trace. I then went through all the traces I had thought were bad from the shot gathers four times- on each instrument I had to look at the receiver gathers for before and after the switch independently. From these receiver gathers, I could get a sense of the range of shots over which the traces were bad and make note of which traces we were going to ignore for which shots.
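A receiver gather is the same data as the shot gathers, just indexed the other way around. The sketch below assumes traces are stored in a nested dict keyed by shot and then channel, and adds a helper that collapses the shots flagged on one channel into contiguous ranges, the kind of note that goes into a log file. Both function names are hypothetical.

```python
from collections import defaultdict


def to_receiver_gathers(shot_gathers):
    """Re-sort {shot: {channel: trace}} into {channel: {shot: trace}}."""
    receiver_gathers = defaultdict(dict)
    for shot, traces in shot_gathers.items():
        for channel, trace in traces.items():
            receiver_gathers[channel][shot] = trace
    return dict(receiver_gathers)


def bad_shot_ranges(bad_shots):
    """Collapse shot numbers flagged on one channel into (first, last) runs."""
    ranges = []
    for shot in sorted(bad_shots):
        if ranges and shot == ranges[-1][1] + 1:
            ranges[-1] = (ranges[-1][0], shot)  # extend the current run
        else:
            ranges.append((shot, shot))         # start a new run
    return ranges


gathers = {1001: {1: "a", 2: "b"}, 1002: {1: "c", 2: "d"}}
print(to_receiver_gathers(gathers))  # {1: {1001: 'a', 1002: 'c'}, 2: {1001: 'b', 1002: 'd'}}
print(bad_shot_ranges([1010, 1003, 1004, 1005]))  # [(1003, 1005), (1010, 1010)]
```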

Once I had made another log file with all of this information, I was able to begin putting the information from the log files into ProMax. To begin with, I just have to use a fake geometry, which calculates the coordinates of each geophone from the geophone spacing. The first geophone is arbitrarily taken as (0,0), and then each subsequent geophone moves 5m along the x-axis. Eventually I will get a file with the real coordinates of each shot point (found using GPS), and input this into ProMax. In the meantime, I used a few programs already on the system to create a few basic shot files that record how many old FFIDs, stations, and new FFIDs I have. I then input this onto the ProMax server using a series of flows that have to be done independently for each RX instrument. Once this information has been fully input (right now there are a few inputting commands I need to find and put into the ProMax system, which should have been set automatically), I will be able to check that there are no strange offsets recorded on any geophone (which could mean the geophone moved during shooting), and I will be able to remove any bad traces and shots, as well as put in blank signals for any skipped shots.
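The fake geometry described above is just station index times spacing. A minimal sketch, assuming stations are numbered from 1 and the line is laid out straight along x; the function name is hypothetical.

```python
def fake_geometry(n_stations, spacing_m=5.0):
    """Assign each station (numbered from 1, an assumption) an (x, y)
    coordinate from the geophone spacing alone: station 1 at (0, 0), each
    following station spacing_m further along the x-axis."""
    return {station: ((station - 1) * spacing_m, 0.0)
            for station in range(1, n_stations + 1)}


geometry = fake_geometry(118)
print(geometry[1], geometry[2], geometry[118])  # (0.0, 0.0) (5.0, 0.0) (585.0, 0.0)
```

With 118 stations at 5m this spans 585m, which is consistent with a line of roughly 600m; real GPS coordinates would later replace these values.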

And then of course, happy late 4th of July to everyone! I can't say I did anything too exciting besides a barbeque in the backyard with my family, but it was definitely nice to have the day off!

Week Four

July 2nd, 2012

Last week was interrupted by more fieldwork right in the middle, but I did finally start looking at real data. On Wednesday we went back to Point Año Nuevo to do another seismic survey on the San Gregorio Fault. We were slightly further north than last week, and this time we were actually on private land, on the historic Cascade Ranch. The farmers let us do the survey right through their land, partly because they’re actually hoping to use the subsurface images we produce to precisely locate the water table in order to dig more water wells for the farm. We used the same procedure as last week and our line was again approximately 600m, but this time we did the entire data collection process- S and P waves- in one, very long day. On a side note, the day before we went out I had another learning experience when one of the government trucks we were driving to the warehouse died on us right in the middle of an intersection. Between the survey and the warehouse we had to get out 2 or 3 times to push the truck and jumpstart it. Most of Tuesday afternoon was just spent getting this truck to the warehouse, loading it up, and waiting for it to be fixed so we could get back to the survey. It has made me realize how much time, in a job like this, one actually spends on logistical issues rather than data analysis!

Then finally on Thursday and Friday I could begin the data analysis process. To learn the process, I sat down with the main computer guy on our team and watched as he started processing the data from a line the team did a few months ago over in Berkeley. Then, using the notes I had taken while watching him, I began the preprocessing steps that turn the raw data and field notes into actual workable data (a process I’ll be working on for a while). The first step is to back up the raw data from the RX machines we use in the field onto a disk. During this step you also have to combine the many files the machines create in the field (each shot is recorded as its own file) into one larger file. From there, it can be put on the computer and entered into the ProMax system in SEG-Y format.

Once the data is actually on the computer, one still needs to go through all the field notes before even looking at the results in ProMax. In the field our main tech guy writes down each shot and records which takeout it was at, and then notes when we had to repeat shots, skip geophones, or simply when we had bad shots (the instruments record and save a file for every shot, even the bad ones). My job was then to put the field notes into a log file that, using some preset syntax, notes when the shots are very good, when they’re bad, when we skipped something, when a takeout was offline, etc. It seems pretty simple, but you have to be careful that all of the takeouts and geophones recorded are correct. Plus, we do all of this in the vi editor in the terminal, which I didn't know at all beforehand. It’s not too tricky, but it did take a while to input all of this data while I’m still learning the editor's commands. From there, I used a preset program to turn that log file into another one that eliminates the bad and repeated shots and gives each remaining shot a new FFID (in the field, every shot, even the bad and repeated ones, is given an FFID, starting at 1001). In my case I also had to reverse the order of the FFIDs, since survey lines conventionally run west to east but we shot east to west. The program is useful for creating a template for the file, but in reality I had to spend a few hours manually updating it so it counted stations, channels and takeouts correctly and didn’t throw out the wrong shots. Finally, from that log file I created a third file that shows the instrument layout (there are two RXs in the field), the shooting layout (if we had changed shooting type), and the data parameters. Again, it’s pretty straightforward but pretty tedious, and it involved a lot of number crunching and checking. There are a million different ways to make sure you’re on the right station or takeout, but once you do find a mistake, it always takes a while to fix it!
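The FFID renumbering step can be sketched roughly as follows. This Python is a hypothetical illustration, not the team's actual preset program: the `Shot` record, the status flags `"good"`, `"bad"` and `"repeat"`, and the starting number for the new FFIDs are all assumptions. It drops bad and repeated shots and, optionally, reverses the order of the kept shots so a line shot east to west is numbered west to east.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    old_ffid: int  # FFID assigned in the field (starting at 1001)
    station: int   # station where the shot was fired
    status: str    # hypothetical flag: "good", "bad" or "repeat"


def renumber(shots, first_new_ffid=1, reverse=True):
    """Map old FFIDs to new, consecutive FFIDs.

    Bad and repeated shots are dropped. If reverse is True, the kept
    shots are numbered in reverse field order (e.g. to turn east-to-west
    shooting into a conventional west-to-east line).
    first_new_ffid is an assumed default, not the team's convention.
    """
    kept = [s for s in shots if s.status == "good"]
    if reverse:
        kept = kept[::-1]
    return {s.old_ffid: first_new_ffid + i for i, s in enumerate(kept)}


field_log = [
    Shot(1001, 120, "good"),
    Shot(1002, 119, "bad"),     # misfire, re-shot as 1003
    Shot(1003, 119, "good"),
    Shot(1004, 118, "good"),
    Shot(1005, 118, "repeat"),  # extra hit at station 118, not kept
]
print(renumber(field_log))  # {1004: 1, 1003: 2, 1001: 3}
```

As the post notes, a generated template like this still has to be checked by hand against the stations, channels and takeouts before it can be trusted.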

Those are all the preprocessing steps I was able to get through at the end of last week, so hopefully tomorrow I’ll be able to learn and implement a few more. There are about 24 distinct steps of preprocessing and data input that I have to go through before I can even start picking data, but fortunately each day gets a bit easier as I become more comfortable with the terminology and computer programs. Below I’ve attached two screenshots of what the log files look like.

Week 3 Fieldwork!

June 28th, 2012

Sorry this is coming so late, but it's been a pretty busy few days and I've only just gotten around to posting this (though I wrote it a few days ago!). Week 4 has had a bit more fieldwork, so once I have a second I'll be writing about that!

So my third week of the internship is through and I’m already almost done with my fourth week. It’s pretty amazing how fast time is moving, and it makes me realize that 11 weeks really isn’t that long! This past week was incredibly productive and I was able to spend most of my time in the field, which was spectacular. On Friday the 15th, a bunch of us went north to do fieldwork for the Master’s thesis of one of the students in the office. We went up to the Filoli Center, a beautiful old estate about 25 miles south of San Francisco that was built in the early 20th century (after the 1906 earthquake, of course). The gardens and the house itself, which are open to the public, take up about 16 acres, but the entire estate is over 650 acres. It’s located right off Highway 280, nestled along the foothills near Crystal Springs Reservoir. Most of the estate outside of the gardens, as well as most of the land west of 280 along the foothills, is controlled by the SF Public Utilities Commission and so is not open to the public. But we got permission to go onto the back roads of the estate to do a seismic survey right over the San Andreas. The line was only 60m, but we had 1m spacing for the geophones, so it still took us the better part of a day. We also had to do both P and S waves. For P waves, because the line was short, we would just swing a large sledgehammer onto a metal plate, wait 2 seconds, and then move on if Rufus determined that the signal propagated well down the entire line. For S waves, we had to use different geophones and a larger, solid metal block. Someone would stand on top of the block and people would have to hit both ends of it. I’ll admit, it was pretty nerve-wracking at first to see people swinging a large sledgehammer right towards someone’s ankles, but we had no injuries all week! All in all, it was a long but productive day. It was also great to get out of my cubicle and spend the day outside in such a beautiful setting.

The next week, we got to go out again, this time to do my fieldwork. We were using 5m spacing this time, but the line was 600m, so we still had 120 geophones. Between P and S waves, it took us two whole days. Fortunately we had a lot of people helping out, including Lily and Eva, two other IRIS interns working in the area, so the work went a lot faster than I expected. The process was pretty much the same as on Friday, but this time for P waves we used Betsy-Seis guns instead of a hammer. To use the guns, you basically have to dig a hole next to the geophone and then place the gun, which honestly looks more like a pogo stick, into the hole. At the end of the gun is a shotgun shell (all blanks). Then you fill in the hole, release the safety, and hit the top of the ‘pogo stick’ with a hammer. That triggers the gun and it shoots the blank into the ground. In the warehouse on Monday we grabbed the wrong shells, so they were a bit too small to propagate down the entire line, but Rufus was still confident we would get clear data. Because we had to curve the line slightly, I’ll have to analyze the line in 3D rather than just 2D, which should take a bit longer but isn’t too much extra work (so I’ve been told). This time we were working on the coast at Point Año Nuevo, just north of Santa Cruz (the park is famous for its elephant seals). Besides the poison oak, it was also a great place to work. The fog never came down on us, so it wasn’t too cold, and we could both see the ocean and hear the seals from where we were working. Our main tech guy is going to show both Carla (the Master's student whose work we did on Friday) and me how to properly download the data from the disks into ProMax, and then I’ll finally be able to start looking at data!

On Thursday, on a side note, I also got to go on a field trip. It started as just the new interns and employees in the office, but by the afternoon almost everyone under 30 in the whole office was there. We went to Hayward, in the East Bay, in the morning to see the Hayward Fault where it runs through the city. Then in the afternoon, after a team barbecue for lunch, we went back to Highway 280, to the Crystal Springs Reservoir, to see the San Andreas. It was less obvious and involved a bit more hiking along the edge of the hills, but we got to clearly see the dip in the land that marks the fault. We also got to see a fence that had been clearly displaced by the 1906 earthquake. It’s one of the last sites where you can clearly see displacement from 1906 (that hasn’t been recreated).

It was definitely nice to spend so much time outside this past week, but hopefully, now that I'm getting a better feel for the actual computer analysis process (through looking at a lot of Rufus' old data and papers), I'll actually start to look at my own data soon!

Week Two!

June 14th, 2012

Not too much new to report this week, but we are getting ready to head out tomorrow to do some fieldwork, which should be exciting. Most of this week has actually been about preparing for the field. At the beginning of the week my advisor and I had to drive up to the county office in Redwood City to find out who owns the property we want to work on. Then we had to drive up to Moss Beach, on the coast about an hour from here, to scout out the area we want to work in (mostly to make sure there’s no poison oak in the area!). On Wednesday I had to head up to the federal building in San Francisco to get my fingerprints and photo taken so I can officially be granted access to the USGS. No one in the building seems to mind me being around, but officially I need to go through a background check and get an actual USGS ID. Both processes (getting the ID and being cleared by the Department of the Interior) should take a few weeks, but fortunately I already seem to have access to most systems and resources anyway.

Today a few of us from our team headed up to the warehouse about 10 minutes away to load up the truck for the field tomorrow. Tomorrow’s work has nothing to do with my project; rather, it's for the Master's thesis of one of the students in the office. We’re heading up to the Crystal Springs Reservoir, where the City of San Francisco stores its water, to do a 60m line over the San Andreas. Although it won't be as hot as it was in Socorro when we were doing the survey line for orientation, it'll be interesting to see how my first real day of fieldwork goes. I know in that area the temperatures can fluctuate from 50 to 85 degrees in one day, and apparently it's just luck whether your site is infested with poison oak or not. Plus, besides orientation, I've done very little actual fieldwork, so it should be an interesting day! But I know it will be great practice for next week, when we'll get back out into the field to do a much longer line (potentially up to 600m at 5m spacing!) for my project. Our initial plan was to head up to Moss Beach, north of Half Moon Bay, and do a survey over the San Gregorio Fault (the westernmost fault system in the larger San Andreas system). Unfortunately, I’ve been working all week to get permission for that project, and it still doesn’t look like we’ll have all the proper permits from landowners in the area in time. So instead we’ll be heading south to Año Nuevo, just north of Santa Cruz and Monterey Bay, the other point where the SGF comes onshore. It’s less important from a hazards perspective, as there are very few people and very little infrastructure in the area, but the SGF is still one of the least understood fault systems in the area, so anything we can do to enhance our understanding is good. Plus, because most of the fault runs offshore (which is why so little work has been done on it compared to the San Andreas Fault), there's a greater risk of tsunamis and landslides.

In the meantime, besides hunting people down to get permits and trying to assemble a team to go into the field next week, I’ve been doing a lot of background reading on my site (given that we’ve changed the proposed site 3 times, it’s been a lot of reading!) and on some of the methods we’ll be using. I’ve also gotten all the necessary programs downloaded and set up on my computer. I have a ProMax manual I’ve been looking through and I have access to the ProMax system, but it's hard to really get a good grasp on the program before I have real data to work with! I've also been helping edit papers for some of the scientists on the team (mostly for grammar and language, not content). It's been a great way to see more of the research being done in the office and to get a feel for the publishing process.

I mentioned some goals last week, but just to continue with that:

I want to become very confident with all of the programs used in the office, ProMax especially. Doing the geometry for a survey line seems to be the most complicated part, so I want to become very comfortable with turning the raw field data into workable ProMax geometry.

Going to the warehouse today I realized just how many different tools and machines are necessary for each field excursion. I want to get a better grasp on what each tool does and when it’s necessary or not, in order to help me lead an actual survey independently (which is what I’ll have to do next week when we go into the field for my project).

I’ve looked a bit at examples of the final products (papers and posters) my advisor and his team have produced from these types of surveys. I know the current plan is to make an image of the fault zone and then use seismic tomography to understand the composition of the shallow crust under the fault. But I want to be able to understand how that kind of work can actually advance our understanding of seismic activity and fault hazards. The interpretation part of a survey seems to me the most interesting part of all the work, and so I want to really understand (and feel confident in) how one uses the image profiles produced by a survey to establish and understand the rupture history and potential of a fault.

First Week at the USGS

June 8th, 2012

I’m sitting in my cubicle, having just arrived for my fifth day of work. It’s quite convenient being placed in the town over from my own, so each day I get to hop on my bike and ride between the USGS and my home. This first week has been pretty low key and focused more on settling into the office than doing actual research. Thanks to the joys of government bureaucracy I feel like I have a new piece of paperwork to fill out each day, and next week I have to head up to the federal building in San Francisco so I can actually get access to all of the USGS’s resources. In the meantime, I’ve just been trying to get to know people around the office, set up my computer with all the necessary software, and do lots of background research for my field site. There’s still some question about where exactly I’ll be working, but we have a few options in the area. An interesting part of scientific research that I’ve been able to experience so far at the USGS is all the work that goes into planning field excursions, including getting permits. Hopefully, though, I’ll be able to get out into the field by the end of next week or early the week after that! That way I’ll have as much time as possible to process all the data, as well as help out on the side with all the other work my office is doing.

This summer I know I’m going to be using a program called ProMax, as well as a lot of basic Unix, so a major goal I have for myself is just to become comfortable and self-sufficient in both, given that I don’t have a strong programming background. On top of this, I know Adobe Illustrator and GMT will be involved in my final product, so hopefully I can figure those out as well. I also just want to become more comfortable with fieldwork and learn all of the little details that go into both planning and doing real fieldwork.